Over the past two decades, remote sensing acquisition and processing have undergone dramatic change in quality, versatility, portability, and cost.

Around 2003, there was no real-time processing and no option for multi-sensor units. In contrast, today’s equipment is not just user-friendly but often has an integrated Global Positioning System (GPS) receiver.

Presently, there are many reliable terrestrial units under $20,000, most of which are either lightweight hand-held units or compact enough to fit in a backpack. This generation of equipment has not only revolutionized equipment accessibility but also spawned a dataset explosion.

Challenges & Opportunities

Previously, the problems were high-cost equipment and logistical difficulties. Now that those have been overcome, the newer challenges are data storage and management.

Storage is not just an issue of repository space but also of having sufficient RAM to process data without repeatedly accessing the hard drive. Problematically, our ability to produce data has far outstripped our ability to host and manipulate it, even on a high-end workstation.

Furthermore, many commercial tools long adopted by the industry cannot ingest and render the billions of points that may be acquired within a single scan. The data acquisition trajectory is only likely to steepen, given the move toward national scanning programs such as the U.S. government’s 3D Elevation Program (3DEP) and the inclusion of LiDAR-like capabilities in the last four iPhone releases.

Photon LiDAR is yet another development to watch. While it has yet to enter the market widely, it has the potential to produce better point clouds, but its development has given little consideration to how the resulting data will be operationalized.

Operationalizing Big Data LiDAR datasets across multiple workforces and domains requires overcoming this functionality bottleneck. However, relying upon the creation of ever bigger workstations is not a scalable solution. While there has been some notable work in distributed computing, the majority of approaches are inherently problematic, as they rely upon the traditional consideration of these datasets as tiled entities.

Chunking data by spatial location and maximum dataset size worked well historically because those point clouds had densities of only a few points per square meter, so a single tile could span several kilometers. Consequently, spatial discontinuity at a tile’s edge was rarely a problem, as most features of interest fit easily within a single tile.

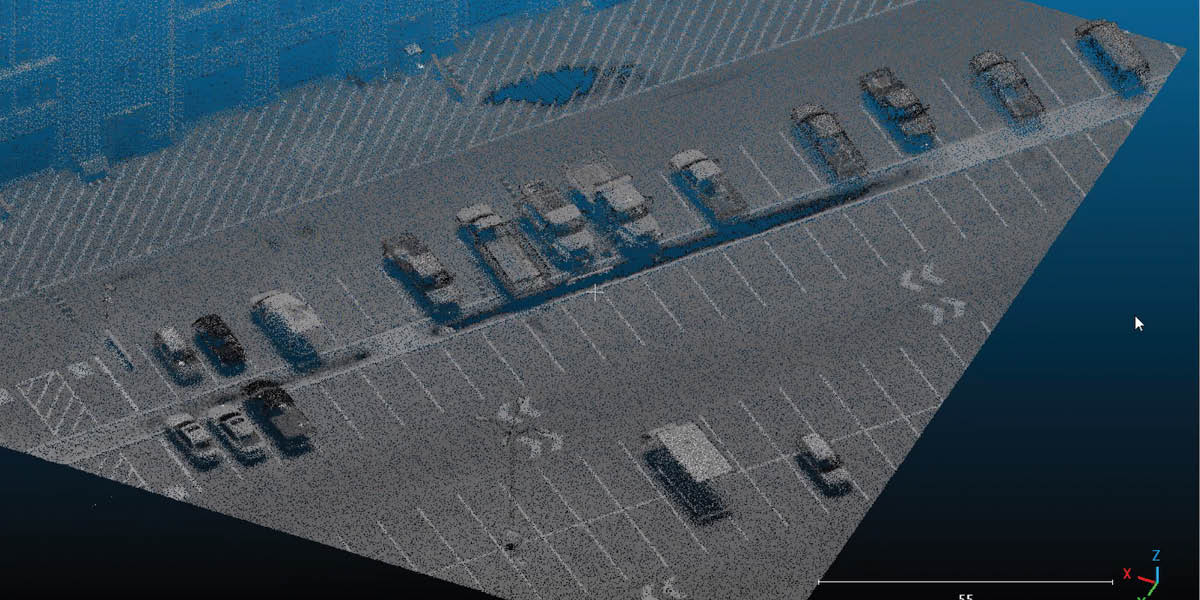

However, today’s datasets of more than a billion points per square kilometer force a choice between extreme degradation of the data, which defeats the purpose of high-resolution scanning, and vastly shrinking each tile’s spatial extent. Smaller tiles increase the probability that features of interest, such as parking lots and buildings, will cross multiple tiles.

This is quite problematic. To date, there is no commercial or otherwise widely available solution for managing LiDAR data across multiple machines, especially in a shared-nothing distributed computing environment, which has been identified as the most promising direction for large-scale point cloud management.
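The tile-size argument can be made concrete with a little arithmetic. The sketch below assumes square tiles capped at a fixed point count, a uniform point density, and square features placed uniformly at random; the 10-million-point cap, the 2 points/m² legacy density, and the 50 m building are illustrative values chosen for this example, not figures from any particular dataset.

```python
import math


def tile_side_m(points_per_km2: float, max_points_per_tile: int) -> float:
    """Side length in meters of a square tile that holds max_points_per_tile
    points at a uniform density of points_per_km2."""
    points_per_m2 = points_per_km2 / 1_000_000
    return math.sqrt(max_points_per_tile / points_per_m2)


def crossing_probability(feature_m: float, tile_m: float) -> float:
    """Probability that a square feature of side feature_m, dropped at a
    uniformly random position, straddles at least one tile edge."""
    if feature_m >= tile_m:
        return 1.0  # the feature cannot fit inside a single tile
    # Along each axis the feature stays inside one tile with probability
    # (tile_m - feature_m) / tile_m; the two axes are independent.
    fits_one_axis = 1.0 - feature_m / tile_m
    return 1.0 - fits_one_axis ** 2


if __name__ == "__main__":
    MAX_POINTS = 10_000_000  # hypothetical per-tile cap
    BUILDING_M = 50.0        # hypothetical feature size
    for label, density_km2 in [("legacy", 2e6), ("modern", 1e9)]:
        side = tile_side_m(density_km2, MAX_POINTS)
        p = crossing_probability(BUILDING_M, side)
        print(f"{label}: tile side {side:,.0f} m, P(building is split) = {p:.1%}")
```

Under these assumptions the legacy tile is roughly 2.2 km across and a 50 m building is split about 4% of the time, while the modern tile shrinks to 100 m and the same building is split 75% of the time.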

Bespoke Approach

An alternative approach has been per-point processing and storage, in which each point is treated independently of all others. This has been shown to work well for applications such as shadow casting and solar potential estimation. The approach is extremely parallelizable, and it allows processed results (both interim and final) to be stored directly with the point itself, which makes those products easier to reuse.
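A minimal sketch of the per-point pattern follows. The `Point` record, the `height_above_datum` product, and the fixed datum elevation are hypothetical stand-ins for a real per-point analysis such as shadow casting; the only claim is that each computation reads and writes a single point, so any partition of the cloud yields the same result.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Point:
    x: float
    y: float
    z: float
    # Interim and final products are stored directly with the point.
    attributes: Dict[str, float] = field(default_factory=dict)


def height_above_datum(p: Point, datum_z: float) -> None:
    """Hypothetical per-point product: uses only this point's own fields."""
    p.attributes["height_above_datum"] = p.z - datum_z


def process_chunk(chunk: List[Point], datum_z: float) -> List[Point]:
    """Process one chunk with no reference to any other chunk."""
    for p in chunk:
        height_above_datum(p, datum_z)
    return chunk


def split(points: List[Point], chunk_size: int) -> List[List[Point]]:
    """Arbitrary partition; spatial adjacency is irrelevant for per-point work."""
    return [points[i:i + chunk_size] for i in range(0, len(points), chunk_size)]


if __name__ == "__main__":
    cloud = [Point(0.0, 0.0, 105.0), Point(1.0, 0.0, 112.5), Point(2.0, 0.0, 98.0)]
    # Each chunk could be sent to a different process or machine.
    for chunk in split(cloud, chunk_size=2):
        process_chunk(chunk, datum_z=100.0)
    print([p.attributes["height_above_datum"] for p in cloud])
```

Because the chunks share nothing, the loop could be replaced by a process pool or a cluster job without changing the results; the price is that no step can look at a point’s neighbors.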

However, per-point processing contravenes the traditional practice of grouping neighboring data to inform both segmentation and object detection. In practice, then, per-point storage and processing is best suited to a subset of applications related to line of sight, and to situations where the need for those applications is known a priori. That is often not the case with general data collections, such as national scans.
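By contrast, neighborhood-based methods need each point’s surroundings. The sketch below, one simple choice among many, bins points into a hypothetical uniform grid in the x-y plane and computes the height range within a radius of a point, the kind of local feature a segmentation step might use. It assumes the search radius is no larger than the cell size, so only the surrounding 3-by-3 block of cells must be searched.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Point3 = Tuple[float, float, float]
Cell = Tuple[int, int]


def build_grid(points: List[Point3], cell: float) -> Dict[Cell, List[int]]:
    """Bin point indices into square cells in the x-y plane."""
    grid: Dict[Cell, List[int]] = defaultdict(list)
    for i, (x, y, _) in enumerate(points):
        grid[(math.floor(x / cell), math.floor(y / cell))].append(i)
    return grid


def neighbors(points: List[Point3], grid: Dict[Cell, List[int]],
              cell: float, i: int, radius: float) -> List[int]:
    """Indices of points within radius of point i (x-y distance).
    Assumes radius <= cell, so only the 3-by-3 block of cells is searched."""
    x, y, _ = points[i]
    cx, cy = math.floor(x / cell), math.floor(y / cell)
    found = []
    for dx in (-1, 0, 1):
        for dy in (-1, 0, 1):
            for j in grid.get((cx + dx, cy + dy), []):
                px, py, _ = points[j]
                if j != i and (px - x) ** 2 + (py - y) ** 2 <= radius ** 2:
                    found.append(j)
    return found


def local_height_range(points: List[Point3], grid: Dict[Cell, List[int]],
                       cell: float, i: int, radius: float) -> float:
    """A simple neighborhood feature: max minus min z around point i."""
    zs = [points[j][2] for j in neighbors(points, grid, cell, i, radius)]
    zs.append(points[i][2])
    return max(zs) - min(zs)
```

If two neighboring points lived in different tiles processed on different machines, this lookup would silently miss one of them, which is the tile-edge discontinuity described earlier.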

Pressing Need

To overcome these difficulties, the community of practice should further explore the rich area of data indexing, both as a native data storage mechanism (as has been done with approaches such as 3D R-trees, octrees, and k-d trees) and as a one-time expense for data organization, in which neighboring or proximal points can be stored more efficiently in the memory of a single desktop computer.

This approach has the potential to vastly extend the capabilities of existing hardware. The implications are significant: it would greatly reduce the hardware-based impediments to exploiting existing datasets and, in turn, enable many more individuals and organizations to work easily and effectively with modern and future datasets.
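One way to pay for indexing as a one-time organizational cost is to reorder points along a space-filling curve so that spatial neighbors tend to sit next to each other in memory and on disk. The sketch below uses Morton (Z-order) codes, a swapped-in example rather than a method proposed in this article; sorting by Morton code visits grid cells in the same order as a depth-first traversal of an octree whose children are ordered by the same bit pattern. The quantization resolution and the 21-bits-per-axis limit are assumptions.

```python
from typing import List, Tuple

Point3 = Tuple[float, float, float]
BITS = 21  # 3 x 21 = 63 bits, so a code fits in a signed 64-bit integer


def morton3(ix: int, iy: int, iz: int, bits: int = BITS) -> int:
    """Interleave bits of three non-negative ints: x -> bit 0, y -> bit 1, z -> bit 2, ..."""
    code = 0
    for b in range(bits):
        code |= ((ix >> b) & 1) << (3 * b)
        code |= ((iy >> b) & 1) << (3 * b + 1)
        code |= ((iz >> b) & 1) << (3 * b + 2)
    return code


def morton_sort(points: List[Point3], resolution: float) -> List[Point3]:
    """One-time reordering of a point cloud into Z-order."""
    mins = [min(p[k] for p in points) for k in range(3)]
    limit = (1 << BITS) - 1

    def key(p: Point3) -> int:
        # Quantize each coordinate to a grid cell index, clamped to the bit budget.
        ix, iy, iz = (min(int((p[k] - mins[k]) / resolution), limit) for k in range(3))
        return morton3(ix, iy, iz)

    return sorted(points, key=key)
```

After sorting, points that share a Morton prefix form a contiguous run corresponding to one octree cell, so a desktop machine could load and process one run at a time instead of the whole cloud.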

Looking Forward

This data operationalization problem is critical not just with respect to increasing data densities but also with respect to the metadata associated with each data point. For example, laser scanning data from 20 years ago generally had an x-, y-, and z-coordinate and an intensity value.

Common commercial equipment now provides red, green, and blue values taken from an integrated camera. Furthermore, high-end commercial equipment can now provide information such as multiple returns, the times of the outgoing and return signals, the full-waveform version of the return signals, the pulse of the outbound signal, and the location of the platform at the moment of acquisition. Current photon laser scanners already record each photon return, as well as its associated energy level.

Critically, next-generation datasets may include a wide range of other information, from the atmospheric to the atomic, depending on future sensor integration. For example, if hyperspectral data were available, each pixel would carry a radiance response for every wavelength band collected along the spectral range. This could add hundreds of values to each and every point in a point cloud, which would necessarily turn our gigabyte data into terabyte data and our terabyte data into petabyte data.
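The jump in scale can be checked with back-of-the-envelope arithmetic. The sketch assumes a 20-byte base record per point (the size of a LAS point data record format 0, which holds coordinates, intensity, and a few flags), one 4-byte float per hyperspectral band, and a hypothetical 300 bands standing in for "hundreds of pieces of data".

```python
BASE_RECORD_BYTES = 20  # assumption: a LAS point format 0 sized record
BYTES_PER_BAND = 4      # assumption: one float32 radiance value per band


def dataset_bytes(n_points: int, n_bands: int = 0,
                  base: int = BASE_RECORD_BYTES,
                  per_band: int = BYTES_PER_BAND) -> int:
    """Raw, uncompressed size of a point cloud with n_bands extra values per point."""
    return n_points * (base + n_bands * per_band)


if __name__ == "__main__":
    n = 1_000_000_000  # one billion points
    for bands in (0, 300):  # 300 bands is a hypothetical hyperspectral sensor
        size = dataset_bytes(n, bands)
        print(f"{bands:>3} bands: {size / 1e9:,.0f} GB ({size / 1e12:.2f} TB)")
```

Under these assumptions a billion-point cloud grows from about 20 GB to about 1.22 TB before compression, roughly sixtyfold, which is the gigabyte-to-terabyte shift described above.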

Before we become victims of our own success in data acquisition, we should really consider how we are going to work with these datasets so that we can benefit from them.