In 1999, just before the turn of the millennium, cinemagoers were captivated as Neo, a human who had been woken to the “real world”, took off his glasses and viewed the world as a digital representation made of glyphs. The Matrix centered on the concept of a world similar to ours: a computer simulation that could not be distinguished from reality. At the time, it was mind-bending, and it was hard to conceive that such a simulation could be built. Almost a quarter of a century later, we openly discuss digital twins of complete cities.

Digital twins didn’t just appear; they evolved. For hundreds of years, companies have built simulations and small-scale versions of full systems to test whether certain aspects work and to understand permissible parameters, just as urban designers and architects build small replicas of areas to see how a building might affect the surrounding aesthetic or light. Without years of small-scale testing and modeled simulations, we might never have landed a rocket on the moon. This testing and analysis hasn’t changed; it still occurs in almost every business. Today, it is more in-depth, it lives in digital form on a hard drive or in a data center, and it is accessible to every involved party. With an exponential increase in our ability to process, analyze, store, and model digital data, we are able to accurately replicate the world around us, from wind, water, and fire in the natural environment to aerodynamics, electronics, and even structural integrity in the engineering world. Our virtual worlds and simulations have become far more realistic.

“The ultimate vision for the Digital Twin is to create, test and build our equipment in a virtual environment,” says John Vickers, NASA’s leading manufacturing expert and Manager of the agency’s Center for Advanced Manufacturing. “Only when we get it to where it performs to our requirements do we physically manufacture it. We then want that physical build to tie back to its Digital Twin through sensors, so that the Digital Twin contains all the information that we could have by inspecting the physical build,” adds Vickers.

The first accepted mention of a Digital Twin was in 2002 at the University of Michigan by Michael Grieves. The proposed model had three components: real space, virtual space, and a linking mechanism for the flow of data and information between the two. At the time, the model was referred to as the “Mirrored Spaces Model”. Although there was a large amount of interest, the technologies available at the time afforded no practical application: computing power was limited, there was little or no capable Internet, and there were only a limited number of ways to implement complex algorithms.
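The three components of that model can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the original formulation: the pump, its temperature reading, and the names `PhysicalAsset`, `VirtualAsset`, and `sync` are all hypothetical, and the link here carries data in one direction only, whereas the model describes a flow between the two spaces.

```python
from dataclasses import dataclass, field


@dataclass
class PhysicalAsset:
    """Real space: a hypothetical pump that exposes one sensor reading."""
    temperature_c: float = 20.0

    def read_sensors(self) -> dict[str, float]:
        return {"temperature_c": self.temperature_c}


@dataclass
class VirtualAsset:
    """Virtual space: the mirrored state plus a history of every update."""
    state: dict[str, float] = field(default_factory=dict)
    history: list[dict[str, float]] = field(default_factory=list)

    def apply(self, reading: dict[str, float]) -> None:
        self.state.update(reading)
        self.history.append(dict(reading))


def sync(physical: PhysicalAsset, virtual: VirtualAsset) -> None:
    """Linking mechanism: carry one reading from real space to virtual space."""
    virtual.apply(physical.read_sensors())


pump = PhysicalAsset()
twin = VirtualAsset()
sync(pump, twin)            # first reading: 20.0
pump.temperature_c = 35.5   # the physical asset changes...
sync(pump, twin)            # ...and the twin is brought back in step
```

After the second `sync`, the twin holds the pump's latest temperature and a history of both readings, which is the kind of record a real twin would analyze.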

When we look at geospatial technology at the start of the millennium, there were only a couple of software packages capable of rendering 3D models, one of which was a breakthrough product from a company called Google, which offered an interactive 3D globe of the world. Internet speeds at that time were at best around 64 kbps to 500 kbps (if you were lucky). Most computers available for business were still running (at best) 500 MHz Pentium III processors with 16-bit graphics.

The turn of the century was a very exciting and confusing time. Businesses across the world were starting to see the benefits of investing in digitalization, and computers were no longer used solely for word processing, spreadsheets, and pulling together reports, as they were in the late 1980s and 1990s. Businesses also saw the introduction of viable products from Autodesk and Esri, which meant that working digitally saved them time and space and gave users more advanced analyses. A viewshed went from being a week of cartographic excellence to an analysis that could be run and printed in a day.

By 2005, several national mapping agencies were either in the process of, or looking to, digitize their products. Relational databases were getting larger and more capable, and companies like Oracle were working with Esri to provide enterprise GIS systems capable of analyzing bigger data. This was the time when businesses started to see the value in geospatial data as more information was digitized.

In 2006, one of the catalysts for the geospatial revolution came from outside the geospatial industry: the Guardian newspaper began a campaign called “Free our Data”. The campaign called for the raw data gathered by Ordnance Survey, and the data gathered on its behalf by local authorities at public expense, to be made freely available for reuse by individuals and companies, in the same way that such data was in the USA.

The next five years changed everything. The geospatial industry started to get 3D capability, the open-source GIS movement started (including OpenStreetMap), and computers started to benefit from much more advanced Graphics Processing Units (GPUs) from Nvidia. A revolution had begun. The geospatial industry was now capturing data like never before and, thanks to the open-source movement, starting to share it. The number of connected devices surpassed the number of people on the planet, and the connectivity needed to make use of those devices was becoming available.

In the UK, thanks to the success of the “Free our Data” campaign, the authorities began preparing the Power of Information Taskforce Report, which would inform the government in 2009 of the benefits of opening data. That year, Gordon Brown, then Prime Minister, announced publicly that all Ordnance Survey mid-scale data would be available for free. In 2010, the national mapping agency released its first national open data, making a set of UK-wide vector datasets freely available.

Around the same time, the UK government opened the data.gov.uk website, with the claim that it would “offer reams of public sector data, ranging from traffic statistics to crime figures, for private or commercial use”. This happened mere months after the US had set up its own site, data.gov, to provide a single site for open government data.

“I want Britain to be the world leader in the digital economy, which will create over a quarter of a million skilled jobs by 2020; the world leader in public service delivery where we can give the greatest possible voice and choice to citizens, parents, patients, and consumers; and the world leader in the new politics where that voice for feedback and deliberative decisions can transform the way we make local and national policies and decisions. Underpinning the digital transformation that we are likely to see over the coming decade is the creation of the next generation of the web — what is called the semantic web, or the web of linked data,” said Gordon Brown on March 22, 2010.

Almost overnight, the geospatial industry went from struggling backroom cartographers and data scientists to holding the world in digital form on their hard drives. Data was available everywhere, and it didn’t take long for people to start joining the dots and unlocking the power of GIS by linking information together to generate more insights and results, with “mash-up” groups, geodata hackathons, and OpenStreetMap meetups occurring on a monthly basis. It was a perfect storm of simultaneous breakthroughs: the Internet of Things (IoT) was exploding, the price of devices was decreasing, precision location devices were becoming more affordable, and the technology was improving rapidly. At the same time, Building Information Modeling (BIM) standards were being developed as computational and Cloud technologies were making everything possible. Furthermore, advancements in Business Intelligence meant dashboards and real-time information were starting to form the notion of smart cities. Advanced cities like Dubai and Abu Dhabi were already collecting 3D information (as well as internal building shapes) as part of their planning systems, so that it could be used when the technology was available.

In the last decade, there has also been a huge advancement in 3D GIS and gaming engines to enable the use of 3D models vital to the rendering of the internal and external components for these digital twins. Today, you just need to Google “3D city model” to find many different datasets, whereas ten years ago there were only a couple, and they were mostly digitized using photogrammetry. Now, there is a huge industry in 3D modeling, with many cities digitized in high-detail 3D and available to download in gaming engine or GIS format for free. The city of Zurich is a great example of that.

Due to the availability of sensors, data capture devices (of the imagery, survey, and seismic kind), connectivity like 5G, open data defining boundaries, topography, terrain and weather, as well as advanced Earth Observation information, Artificial Intelligence (AI) and Machine Learning (ML), it is easier to build a Digital Twin. This has certainly been the approach taken by the renewables, farming, insurance, oil and gas industries, as well as governments.

By using digital twins, an industry can consume data from a multitude of sensors, analyze trends, provide predictions using AI and ML, and then visualize the results through dashboards, 3D, 4D, and even Virtual Reality, so that risks and potential damages are reduced to a minimum, if not zero. This not only provides a safe product or urban environment but also makes for a very profitable and financially secure business proposition.
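As a rough illustration of the "analyze trends, provide predictions" step, the sketch below fits a straight line to a handful of sensor readings and projects it forward. It is a deliberately simple stand-in for the AI and ML models mentioned above, not a description of any particular product; the vibration readings, the alert threshold, and the function names are all made up for the example.

```python
from statistics import mean


def fit_trend(times, values):
    """Ordinary least-squares line through (time, value) points."""
    t_bar, v_bar = mean(times), mean(values)
    slope = sum((t - t_bar) * (v - v_bar) for t, v in zip(times, values)) / sum(
        (t - t_bar) ** 2 for t in times
    )
    intercept = v_bar - slope * t_bar
    return slope, intercept


def predict(slope, intercept, t):
    """Project the fitted line to time t."""
    return slope * t + intercept


# Hypothetical hourly vibration readings (mm/s) streamed from one sensor.
hours = [0, 1, 2, 3]
vibration_mm_s = [1.0, 1.5, 2.0, 2.5]

slope, intercept = fit_trend(hours, vibration_mm_s)
forecast = predict(slope, intercept, 6)   # expected reading at hour 6

ALERT_THRESHOLD = 3.5                     # assumed limit, for illustration only
needs_inspection = forecast > ALERT_THRESHOLD
```

In a real twin, the flag computed at the end would feed the dashboard or 3D view, so that an engineer sees the risk before the physical asset reaches it.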

So, where do we go from here? Well, it is hard to speculate but what is certain is that as computers become more powerful and microchips become smaller, we will see more complex IoT sensors and the ability to consume more information. We will also be able to make sense of it through better technology, whether it be more engaging VR, AR, or interactive holographic TVs. Computer-aided Design (CAD), GIS, and gaming engines are already becoming more integrated, and it isn’t hard to see that in the near future there will be a single system that will consume all this data and apply it in a common standard. This will mean a reduction of silos between data scientists, BIM practitioners, GIS, CAD, 3D modelers, architects, environmental specialists, historic specialists, acoustic specialists, meteorological specialists, and even engineers.

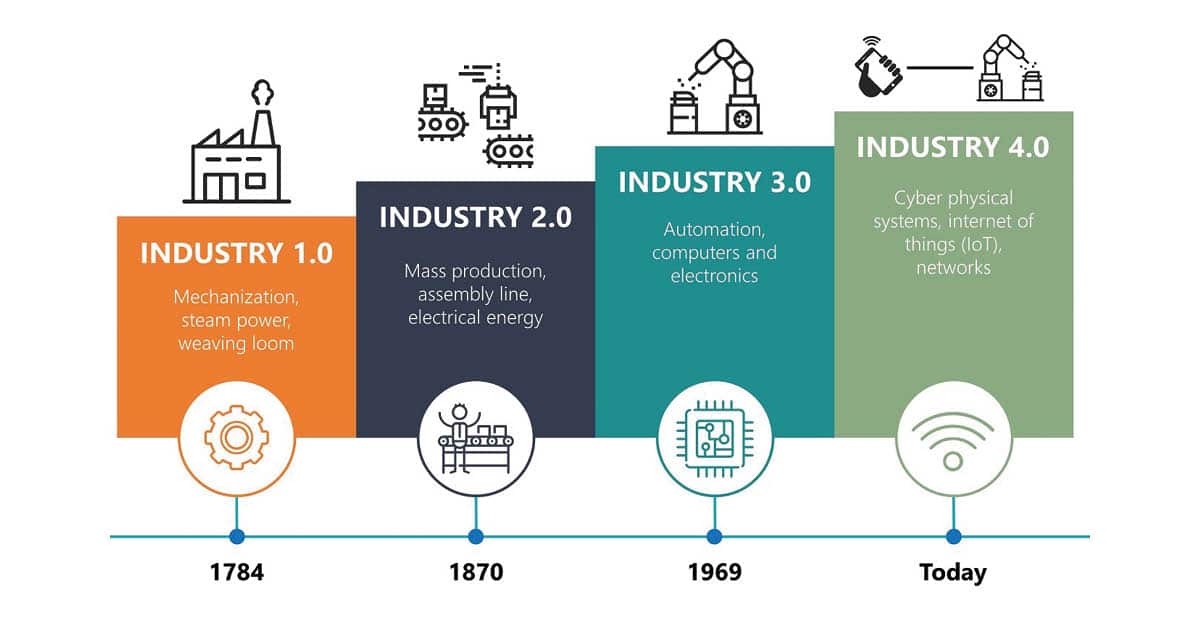

According to Statista, the number of IoT devices will reach 25.44 billion by 2030. This is almost triple the number available today, according to data growth statistics. In the consumer segment, the primary use cases for the technology will be media devices like smartphones, smart grid, asset tracking and monitoring, autonomous vehicles, and IT infrastructure. Furthermore, the Handbook of Research on Cloud Infrastructures for Big Data Analytics claims that machine-generated data accounted for over 40% of Internet data in 2020, and the global machine learning market will be worth $117.19 billion by the end of 2027. We are at the start of a data revolution that has enabled us to envisage smart cities and digital twins. This is just the beginning of an industrial revolution that will change the world around us.

© Geospatial Media and Communications. All Rights Reserved.